Abstract

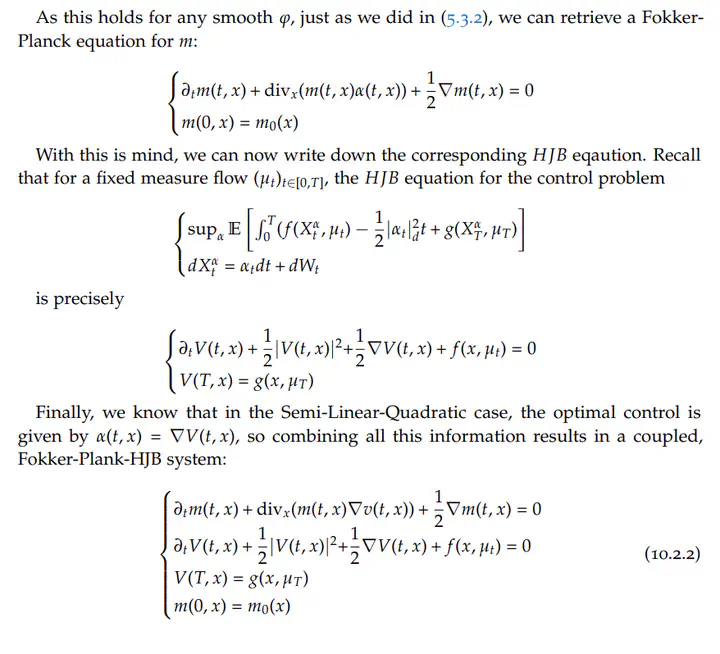

This document is a self-contained introduction to mean-field games. As the number of players in a game grows, finding equilibria becomes increasingly complex and is often infeasible, even with computational tools. Mean-field games approximate these equilibria using ideas from mean-field theory, a tool widely used in physics to approximate the dynamics of large particle systems. This theory postulates that a particle’s dynamics can be predicted from the statistical properties of the system alone, without accounting for the individual interaction of each particle with every other. With particles replaced by rational agents, mean-field theory yields a good approximation of Nash equilibria in stochastic differential games. More precisely, mean-field game theory reduces the problem of finding Nash equilibria to solving a system of coupled partial differential equations: the Hamilton-Jacobi-Bellman equation, which represents player rationality, and the Fokker-Planck equation, which condenses the system’s statistical properties. The theory draws on ideas from several other branches of mathematics, including stochastic optimal control, stochastic analysis, stochastic processes, and partial differential equations.